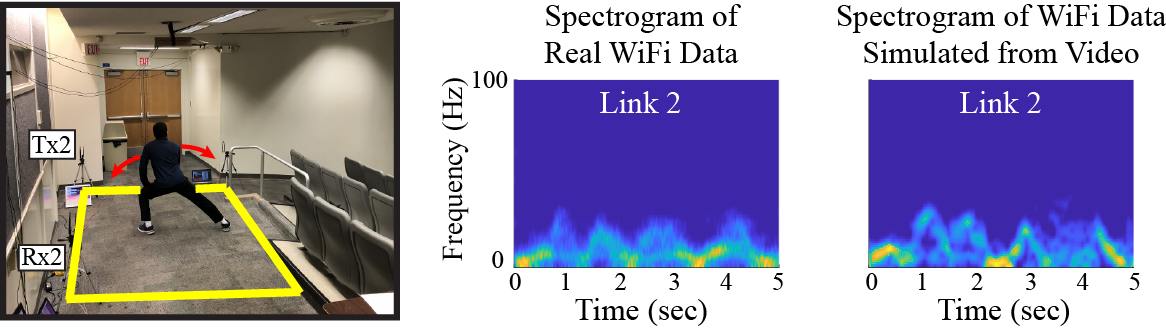

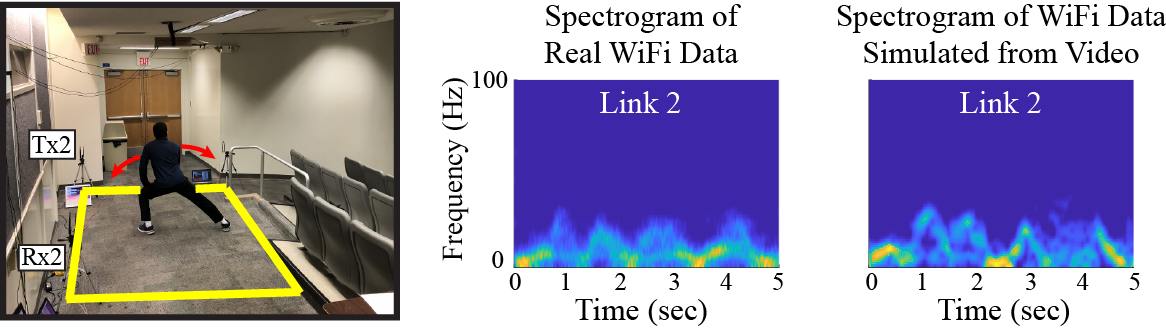

On this website, we make publicly available sample data and code for converting given video footage to instant RF signals, a technique we proposed in our IMWUT20 paper [1]. Consider video footage of a person engaged in an activity. In that paper, we showed how one can generate the RF signals that would have been measured by an RF transceiver placed in the vicinity of the person in the video. This method can then be used to train any human-motion-based RF sensing system, in any given setup, without collecting any RF training data, using only available videos of the relevant activities.

Here is a brief summary of the approach. We first extract a 3D human mesh model of the person in the video, and then use this extracted mesh to simulate the RF signal that would have been measured if this person were in an RF-covered area. We have further showcased one application of this technique through a sample case study, in which we trained a WiFi-based gym activity classifier using only available online videos of gym activities. For questions or comments regarding this dataset, please contact Belal Korany.

[1] H. Cai*, B. Korany*, C. R. Karanam*, and Y. Mostofi, "Teaching RF to Sense without RF Training Measurements," in Proceedings of the ACM on Interactive, Mobile, Wearable, and Ubiquitous Technologies (IMWUT), Vol. 4, Issue 4, 2020. [pdf] (*equal contribution)

Another application scenario for this technique can be found in our MobiCom19 paper [2], where we showed how WiFi can identify a person from given video footage. Other potential applications of this technology are discussed in both papers.

[2] B. Korany*, C. R. Karanam*, H. Cai*, and Y. Mostofi, "XModal-ID: Using WiFi for Through-Wall Person Identification from Candidate Video Footage," 25th Annual International Conference on Mobile Computing and Networking (MobiCom), Oct. 2019. [pdf] (*equal contribution)

On this site, we release sample data and code for translating video data to instant RF data in the context of gym activities. More specifically, sample videos of a person engaged in different gym activities, as well as the code used to simulate the WiFi data from these videos, can be downloaded from the following link:

Details about the data format and the code usage can be found in the ReadMe document in the downloaded folder. If you have any questions or comments regarding the data and/or the code, please contact Belal Korany.

The code/data are owned by UCSB and can be used for academic purposes only.

If you have used this data and/or code for your work, please refer the readers to this website at its DOI address: https://doi.org/10.21229/M93M27, and also cite the following paper:

H. Cai, B. Korany, C. R. Karanam, and Y. Mostofi, "Teaching RF to Sense without RF Training Measurements," in Proceedings of the ACM on Interactive, Mobile, Wearable, and Ubiquitous Technologies (IMWUT), Vol. 4, Issue 4, 2020.

    @article{CaiKoranyKaranamMostofi2020,
      title={Teaching RF to Sense without RF Training Measurements},
      author={Cai, H. and Korany, B. and Karanam, C. R. and Mostofi, Y.},
      journal={Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies},
      volume={4},
      number={4},
      pages={1--22},
      year={2020},
      publisher={ACM New York, NY, USA}
    }

We provide sample videos of three different gym activities out of the set of activities described in [1]. More specifically, the data set contains two videos of each of the following exercises: 1) lateral lunges, 2) sit-ups, and 3) stiff-leg deadlifts.

The video frames can be found in the subfolder "video_frames". See the ReadMe file in the provided folder for more details.

The first part of the conversion pipeline is to extract the 3D mesh of the person in the video. This is done using the Mask R-CNN system (Ref. [3]) and the Human Mesh Recovery (HMR) system (Ref. [4]).

The code for these systems is publicly available here and here. We also provide a copy of this code in the subfolders "MASK_RCNN" and "human mesh recovery". Both systems run on Ubuntu and require a CUDA-capable GPU.

The provided script "get_mask_mesh.sh" feeds the video frames to these systems and saves the generated meshes in the subfolder "video_meshes".
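Before moving on to the RF simulation step, it can be useful to confirm that every frame produced a mesh. The following is a minimal sketch, not part of the released code. It assumes (hypothetically) that each mesh file in "video_meshes" mirrors the relative path and base name of its source frame in "video_frames", differing only in its extension. The actual layout is described in the ReadMe and may differ. The function name `frames_missing_meshes` is introduced here for illustration only.

```python
from pathlib import Path


def relative_stems(root):
    """Return every file under root as a relative path with its extension removed."""
    root = Path(root)
    return {
        p.relative_to(root).with_suffix("").as_posix()
        for p in root.rglob("*")
        if p.is_file()
    }


def frames_missing_meshes(frames_dir, meshes_dir):
    """List frames that have no mesh file with the same relative base name.

    ASSUMPTION: meshes mirror the frame layout and base names; check the
    ReadMe for the real naming scheme before relying on this.
    """
    return sorted(relative_stems(frames_dir) - relative_stems(meshes_dir))
```

For example, `frames_missing_meshes("video_frames", "video_meshes")` would return an empty list if, under the stated assumption, every frame has a corresponding mesh.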

The final step is to simulate the RF signal that would have been measured by RF transceivers in the vicinity of the person in the video. This RF simulation step is implemented in MATLAB, in the provided script "generate_wifi_from_video.m". The code takes as input the extracted meshes (saved in the "video_meshes" subfolder), simulates the WiFi signals using the Born approximation, generates the spectrogram of the WiFi signals, and saves the spectrogram in the "simulated_spectrograms" subfolder. See the ReadMe file and the comments within the MATLAB code for more information on the code implementation.
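To give a feel for this step, here is a minimal Python sketch of a Born-approximation-style simulation followed by a spectrogram. It is not a port of "generate_wifi_from_video.m", and the released MATLAB code may differ in every detail. The sketch assumes that the body is a set of point scatterers (for instance, mesh vertices), that each point contributes one single-scattering path from transmitter to point to receiver with free-space amplitude decay, and that reflectivity, occlusion, the direct path, and antenna patterns are ignored. All names (`received_signal`, `spectrogram`), the 5 GHz carrier, the 100 Hz frame rate, and the speed of light rounded to 3e8 m/s are illustrative assumptions.

```python
import cmath
import math

C = 3e8  # speed of light in m/s (rounded; an assumption for this sketch)


def received_signal(scatterer_tracks, tx, rx, freq_hz):
    """Sum single-scattering contributions at each time step.

    scatterer_tracks[t] is a list of (x, y, z) points at frame t.
    Each point adds a term for the path Tx -> point -> Rx.
    """
    k = 2 * math.pi * freq_hz / C  # wavenumber
    signal = []
    for points in scatterer_tracks:
        total = 0j
        for p in points:
            d_tx = math.dist(tx, p)
            d_rx = math.dist(p, rx)
            total += cmath.exp(-1j * k * (d_tx + d_rx)) / (d_tx * d_rx)
        signal.append(total)
    return signal


def spectrogram(signal, window, hop):
    """Squared-magnitude short-time DFT using a Hann window."""
    hann = [0.5 - 0.5 * math.cos(2 * math.pi * n / (window - 1))
            for n in range(window)]
    frames = []
    for start in range(0, len(signal) - window + 1, hop):
        seg = [signal[start + n] * hann[n] for n in range(window)]
        frames.append([
            abs(sum(seg[n] * cmath.exp(-2j * math.pi * f * n / window)
                    for n in range(window))) ** 2
            for f in range(window)
        ])
    return frames


# Toy example: one point moving toward a co-located Tx/Rx at 0.375 m/s.
fs = 100.0          # frames per second (assumed)
speed = 0.375       # m/s toward the transceiver
track = [[(5.0 - speed * n / fs, 0.0, 0.0)] for n in range(200)]
sig = received_signal(track, tx=(0.0, 0.0, 0.0), rx=(0.0, 0.0, 0.0), freq_hz=5e9)
spec = spectrogram(sig, window=64, hop=32)

# Expected Doppler shift: 2 * speed / wavelength = 2 * 0.375 / 0.06 = 12.5 Hz,
# which is bin 8 at a resolution of fs / window = 1.5625 Hz per bin.
peak_bin = max(range(64), key=lambda f: spec[0][f])
```

In this toy case the spectrogram energy concentrates in the bin that matches the Doppler shift of the moving point. With a full mesh, each body part moves at its own speed, so the spectrogram shows a spread of Doppler components that varies with the activity.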