(*equal contribution)

Summary of Our Approach

In this paper, we propose XModal-ID, a novel video-WiFi cross-modal gait-based person identification system. Given the WiFi signal measured outside an area behind a wall, and video footage of a person in another area, XModal-ID can determine whether the person in the video is in the WiFi area on the other side of the wall. For instance, consider Figure 1 (left), where a pair of WiFi transceivers is placed outside an area. Given the video footage on the right, XModal-ID can decide whether the person in the video is on the other side of the wall in the left figure, using only the received power measurements of the WiFi link.


Figure 1. (left) A pair of WiFi transceivers is placed outside. The transmitter sends a wireless signal whose received power (or magnitude) is measured by the receiver. (right) Given this video footage, and using only such received power measurements, XModal-ID can determine whether the person behind the wall in the left figure is the same person as in the video footage.

Identifying a person through walls from candidate video footage is a challenging problem. Our underlying idea is that the way each of us moves is unique: as a person walks, the way different body parts move with respect to each other can serve as a unique identifier of that person. The question is how to properly capture and compare the gait information contained in the video and WiFi signals to establish whether they belong to the same person.

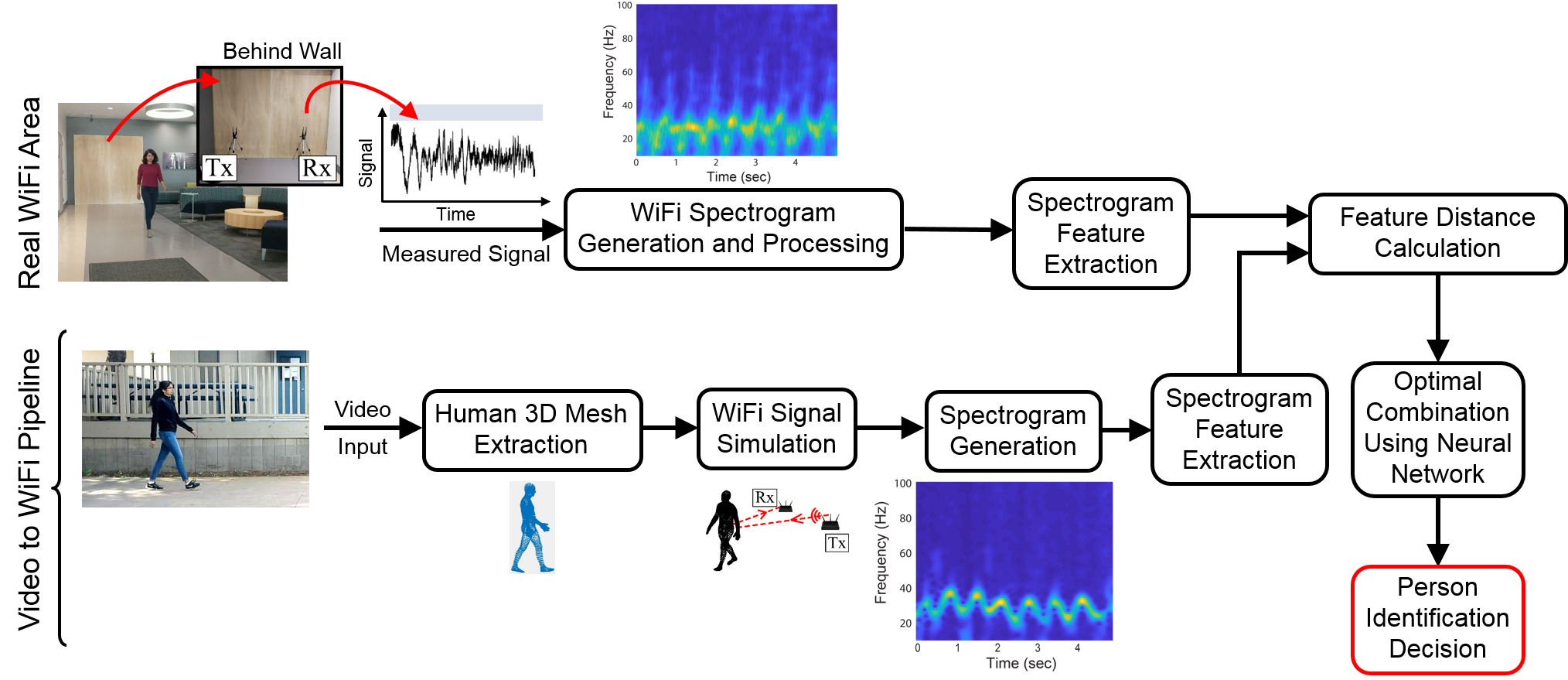

Our proposed approach is multidisciplinary, drawing on both wireless communications and computer vision. The main steps of XModal-ID are shown in the flowchart of Figure 2 and briefly explained next. Given video footage, we first use a human mesh recovery algorithm to extract the 3D mesh describing the outer surface of the human body as a function of time. We then use the Born approximation of electromagnetic wave scattering to simulate the RF signal that would have been received if this person were walking in a WiFi area.
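To make the simulation step above concrete, here is a minimal sketch, assuming a simplified single-bounce (first-order Born) model in which every mesh vertex acts as an isotropic point scatterer with the same reflectivity, and ignoring wall attenuation, antenna patterns, and multipath. The function name `simulate_wifi_magnitude`, the 0.125 m wavelength default (roughly 2.4 GHz), and the `reflectivity` parameter are hypothetical choices for illustration, not the XModal-ID implementation.

```python
import numpy as np


def simulate_wifi_magnitude(vertices, tx, rx, wavelength=0.125, reflectivity=1.0):
    """Simplified first-order (Born) received-magnitude simulation.

    vertices: array of shape (T, V, 3), mesh vertex positions in meters per frame.
    tx, rx: length-3 transmitter / receiver positions in meters.
    Assumption: each vertex is an isotropic point scatterer with identical
    reflectivity; wall losses and multi-bounce paths are ignored.
    """
    vertices = np.asarray(vertices, dtype=float)
    tx = np.asarray(tx, dtype=float)
    rx = np.asarray(rx, dtype=float)
    k = 2 * np.pi / wavelength  # wavenumber

    # Direct (line-of-sight) path from transmitter to receiver.
    d_los = np.linalg.norm(rx - tx)
    direct = np.exp(-1j * k * d_los) / d_los

    # Distances transmitter -> vertex and vertex -> receiver, shape (T, V).
    d1 = np.linalg.norm(vertices - tx, axis=-1)
    d2 = np.linalg.norm(vertices - rx, axis=-1)

    # Born approximation: the scattered field is a superposition of independent
    # single-bounce contributions, each with spherical spreading loss.
    scattered = reflectivity * np.exp(-1j * k * (d1 + d2)) / (d1 * d2)
    field = direct + scattered.sum(axis=-1)  # complex field per frame, shape (T,)
    return np.abs(field)  # only the magnitude is available from the real link
```

Feeding the per-frame vertices recovered from a video into a function like this yields a synthetic magnitude trace that can then be processed in the same way as the measured WiFi signal.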

Next, we propose a time-frequency processing pipeline to extract key gait features from both the real WiFi signal (measured behind the wall) and the video-based simulated one. More specifically, we use a combination of the Short-Time Fourier Transform and Hermite functions to generate a spectrogram for each signal. A spectrogram captures the time-frequency content of the signal, which implicitly carries the gait information of the person. We then extract a set of 12 key features from both spectrograms and calculate the distance between corresponding features. These feature distances are fed into a small 1-layer neural network that finds the optimal weights for combining them (since some features may be more important than others), so that XModal-ID can determine whether the person in the video is the same person behind the wall. Notably, this 1-layer neural network is trained on a small dataset that is completely disjoint from the people and areas used during operation.
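The spectrogram step above can be sketched as a multitaper STFT, assuming the Hermite functions serve as a set of analysis windows whose power spectra are averaged. This is one common way to combine the two; the exact windows, time scale, and averaging used by XModal-ID are not given here, and the names `hermite_functions`, `hermite_spectrogram`, `win_len`, `hop`, and `n_tapers` are hypothetical.

```python
import numpy as np


def hermite_functions(n_tapers, n_samples, scale=None):
    """Sample the first n_tapers orthonormal Hermite functions on a centered grid.

    Uses the recurrence
        h_0(t) = pi^(-1/4) exp(-t^2 / 2)
        h_1(t) = sqrt(2) t h_0(t)
        h_n(t) = sqrt(2/n) t h_{n-1}(t) - sqrt((n-1)/n) h_{n-2}(t)
    and rescales each discrete taper to unit energy.
    """
    if scale is None:
        scale = n_samples / 8.0  # assumed width: grid spans roughly t in [-4, 4]
    t = (np.arange(n_samples) - (n_samples - 1) / 2.0) / scale
    h = np.zeros((n_tapers, n_samples))
    h[0] = np.pi ** -0.25 * np.exp(-t ** 2 / 2)
    if n_tapers > 1:
        h[1] = np.sqrt(2.0) * t * h[0]
    for n in range(2, n_tapers):
        h[n] = np.sqrt(2.0 / n) * t * h[n - 1] - np.sqrt((n - 1) / n) * h[n - 2]
    return h / np.linalg.norm(h, axis=1, keepdims=True)


def hermite_spectrogram(x, fs, win_len=256, hop=64, n_tapers=4):
    """Multitaper STFT spectrogram using Hermite-function windows.

    Returns (freqs, times, S), where S[f, m] is |STFT|^2 averaged over tapers.
    """
    x = np.asarray(x, dtype=float)
    if len(x) < win_len:
        raise ValueError("signal shorter than one window")
    x = x - x.mean()  # drop the static (DC) level of the magnitude trace
    tapers = hermite_functions(n_tapers, win_len)
    n_frames = 1 + (len(x) - win_len) // hop
    S = np.empty((win_len // 2 + 1, n_frames))
    for m in range(n_frames):
        frame = x[m * hop : m * hop + win_len]
        spectra = np.fft.rfft(tapers * frame, axis=1)  # one spectrum per taper
        S[:, m] = np.mean(np.abs(spectra) ** 2, axis=0)
    freqs = np.fft.rfftfreq(win_len, d=1.0 / fs)
    times = (np.arange(n_frames) * hop + win_len / 2.0) / fs
    return freqs, times, S
```

Averaging over several orthogonal windows reduces the variance of the spectral estimate compared with a single window, which is one plausible reason to pair the STFT with Hermite functions; gait features would then be read off `S`.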


Figure 2. Key components of our proposed XModal-ID pipeline.
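The final matching stage described earlier (per-feature distances combined by a 1-layer network) can be sketched as logistic regression on the 12 feature distances. This is a sketch under stated assumptions: the features themselves are not specified here, so the vectors are treated as opaque arrays, and the helper names, learning rate, epoch count, and 0.5 decision threshold are hypothetical.

```python
import numpy as np

N_FEATURES = 12  # number of gait features per spectrogram, as stated in the text


def sigmoid(z):
    # Numerically stable logistic function (no overflow for large |z|).
    return 0.5 * (1.0 + np.tanh(0.5 * z))


def feature_distances(video_feats, wifi_feats):
    """Absolute per-feature distance between simulated and measured features."""
    return np.abs(np.asarray(video_feats, float) - np.asarray(wifi_feats, float))


def train_combiner(D, y, lr=0.1, epochs=2000):
    """Fit one linear layer plus sigmoid (logistic regression) by gradient descent.

    D: (n_pairs, N_FEATURES) distance vectors; y: 1.0 = same person, 0.0 = not.
    Minimizes binary cross-entropy; returns weights w and bias b.
    """
    n, d = D.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        err = sigmoid(D @ w + b) - y  # gradient of the loss w.r.t. the logit
        w -= lr * (D.T @ err) / n
        b -= lr * err.mean()
    return w, b


def same_person(dists, w, b, threshold=0.5):
    """True where the predicted probability of 'same person' meets the threshold."""
    return sigmoid(np.asarray(dists, float) @ w + b) >= threshold
```

Because the layer only learns how much to trust each distance, not what any particular person looks like, it can in principle be trained once on unrelated people and areas, in line with the disjoint training set described above.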

Sample Experimental Results

We have run several experiments on our campus to validate the proposed framework. In these experiments, XModal-ID identifies whether the person behind the wall is the same as the one in a candidate video footage, using only the received signal magnitude of a pair of WiFi transceivers. Our approach does not require prior WiFi or video training data of the person to be identified, nor any knowledge of the operation area or the person's track. Moreover, the WiFi and video areas are different. We next show sample results.

Through-Wall Experiment Area 1:

In this experiment, a pair of WiFi transceivers are placed outside of a hallway area, behind a wall, as illustrated in Figure 3 (left). XModal-ID then determines if the person walking in this hallway is the same as the person in a candidate video footage (a sample candidate video footage is shown in the right figure). We have tested XModal-ID with a total of 384 WiFi-video pairs from a pool of 8 people in this area. XModal-ID achieves a through-wall identification accuracy of 83% in this area.


Figure 3. (left) A pair of WiFi transceivers is placed behind the wall, while a person walks on the other side in the hallway. (right) A sample candidate video footage of a person walking. XModal-ID then decides whether the person in the video is behind the wall. We have tested XModal-ID with 384 WiFi-video pairs from a pool of 8 people in this area and achieved an identification accuracy of 83%.

Through-Wall Experiment Area 2:

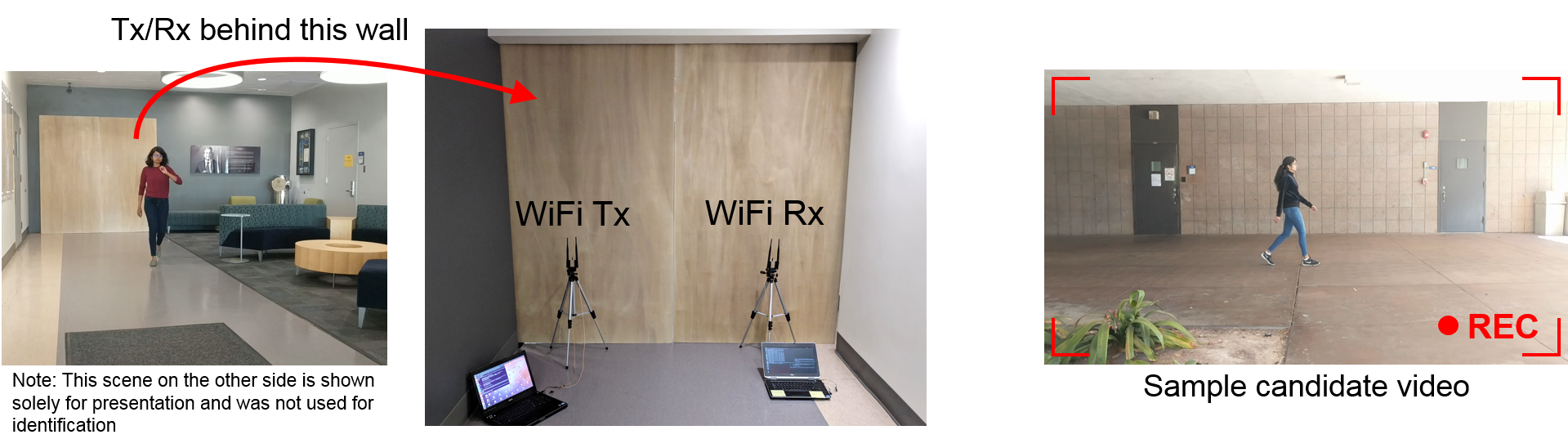

In this experiment, a pair of WiFi transceivers are placed outside of a lounge area, behind a wall, as illustrated in Figure 4 (left). XModal-ID then determines if the person walking in this lounge is the same as the person in a candidate video footage (a sample candidate video footage is shown in the right figure). We have tested XModal-ID with a total of 744 WiFi-video pairs from a pool of 8 people in this area. XModal-ID achieves a through-wall identification accuracy of 82% in this area.


Figure 4. (left) A pair of WiFi transceivers is placed behind the wall, while a person walks on the other side in the lounge. (right) A sample candidate video footage of a person walking. XModal-ID then decides whether the person in the video is behind the wall. We have tested XModal-ID with 744 WiFi-video pairs from a pool of 8 people in this area and achieved an identification accuracy of 82%.

Through-Wall Experiment Area 3:

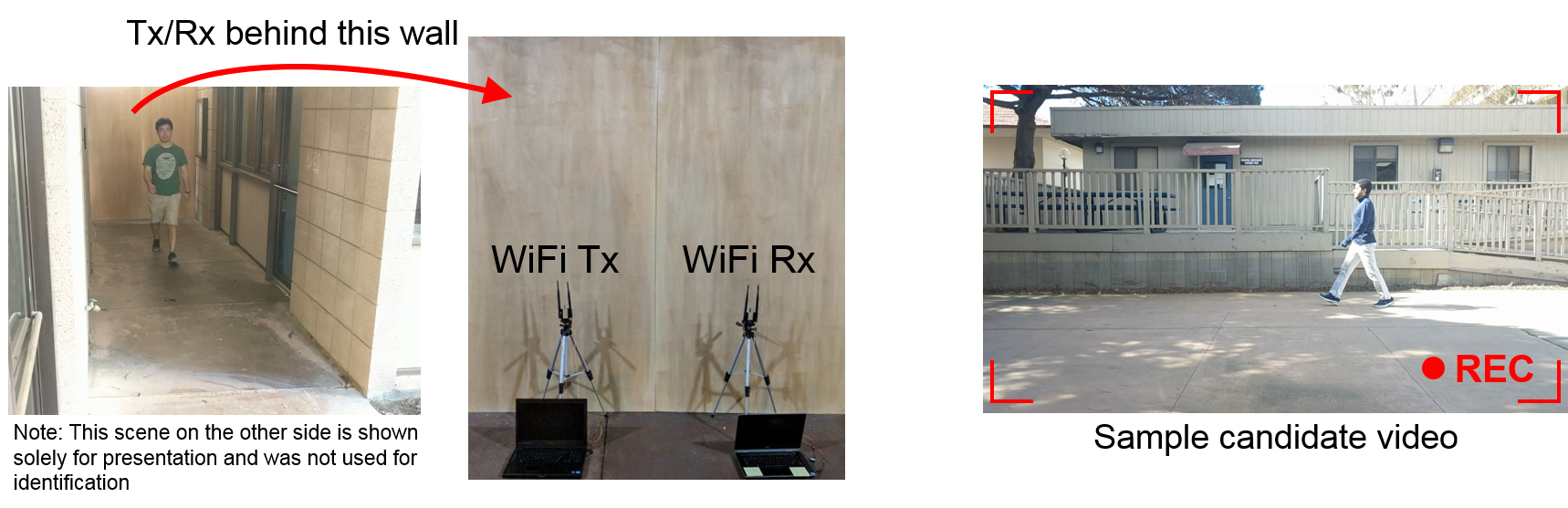

In this experiment, a pair of WiFi transceivers are placed outside of another area, behind a wall, as illustrated in Figure 5 (left). XModal-ID then determines if the person walking on the other side is the same as the person in a candidate video footage (a sample candidate video footage is shown in the right figure). We have tested XModal-ID with a total of 360 WiFi-video pairs from a pool of 8 people in this area. XModal-ID achieves a through-wall identification accuracy of 89% in this area.


Figure 5. (left) A pair of WiFi transceivers is placed behind the wall, while a person walks on the other side. (right) A sample candidate video footage of a person walking. XModal-ID then decides whether the person in the video is behind the wall. We have tested XModal-ID with 360 WiFi-video pairs from a pool of 8 people in this area and achieved an identification accuracy of 89%.

Additional Experiments:

We have also tested XModal-ID in non-through-wall scenarios in two areas, with a total of 768 WiFi-video pairs, drawn from a pool of 8 people. XModal-ID achieves an overall identification accuracy of 88% in this setting.

Potential Applications

There are several potential applications that can benefit from XModal-ID. Two such broad sets of applications are:

Security and Surveillance: Consider a scenario where video footage of a crime scene is available and the police are searching for the suspect. Using XModal-ID, a pair of WiFi transceivers outside a suspected hideout can determine, from outside, whether the person in the crime video is hiding inside. Moreover, the existing WiFi infrastructure of public places could be used to detect the presence of the suspect, by referencing the crime-scene video footage.

Personalized Services: Consider a smart home, where each resident has personal preferences (e.g., lighting, music, and temperature). The home WiFi network can use XModal-ID and one-time video samples of the residents to identify a person walking in an area of the house and activate his/her preferences, without the need to collect wireless/video data of each resident for training purposes. New residents can also be easily identified without a need for retraining.

Acknowledgements

We thank all the students who walked in our experiments.