CE Capstone - Projects

2017-2018

HoverHand

Members

Austin Dorotheo

Colin Garrett

Miclos Lobins

Steven Fields

Zach Meyer

Description

The UCSB HoverHand team's goal is to let a user control a quadcopter drone with hand movements. Tracking the hand may provide a more intuitive and precise method of control than a conventional RC controller. The glove acts as the transmitter to the drone's receiver, relaying the primary flight controls. Another important part of the glove's operation is capturing hand movement so that the hand's position and orientation are described accurately and meaningfully. There are 6 key types of motion control in a quadcopter's flight: forward/back, left/right, pitch (forward/back rotation), roll (left/right rotation), throttle, and yaw (rotation about the vertical axis). These 6 types of movement must be mapped to hand movements or gestures in a way that allows seamless control of the quadcopter. To model hand movements and gestures, sensors must be placed on the fingers and on the top of the hand.
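As a rough illustration of the gesture mapping, the sketch below converts one glove reading into the four stick channels of a typical RC transmitter (roll, pitch, yaw, throttle); on a quadcopter, forward/back and left/right translation come from pitching and rolling. It assumes, hypothetically, an IMU on the back of the hand that reports roll and pitch in degrees and a yaw rate in degrees per second, a finger flex sensor normalized to 0..1 for throttle, and standard 1000-2000 µs RC pulse widths. The names, limits, and gesture assignments are illustrative only, not the team's design.

```python
from dataclasses import dataclass

# Hypothetical limits: tilting the hand past +/-45 degrees saturates the command.
MAX_TILT_DEG = 45.0
MAX_YAW_RATE_DPS = 90.0
PWM_MIN, PWM_MID, PWM_MAX = 1000, 1500, 2000  # typical RC pulse widths in microseconds


@dataclass
class HandState:
    roll_deg: float      # hand tilted left/right (assumed IMU on the back of the hand)
    pitch_deg: float     # hand tilted forward/back
    yaw_rate_dps: float  # hand twisting about the vertical axis, degrees per second
    flex: float          # assumed finger flex reading: 0.0 = open hand, 1.0 = closed fist


def clamp(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


def to_pwm(value: float, limit: float) -> int:
    """Map a signed value in [-limit, limit] linearly onto PWM_MIN..PWM_MAX around PWM_MID."""
    norm = clamp(value / limit, -1.0, 1.0)
    return round(PWM_MID + norm * (PWM_MAX - PWM_MID))


def hand_to_channels(h: HandState) -> dict:
    """Translate one hand reading into the four primary RC channels."""
    return {
        "roll": to_pwm(h.roll_deg, MAX_TILT_DEG),
        "pitch": to_pwm(h.pitch_deg, MAX_TILT_DEG),
        "yaw": to_pwm(h.yaw_rate_dps, MAX_YAW_RATE_DPS),
        # Throttle is unsigned: closing the hand raises it from minimum to maximum.
        "throttle": round(PWM_MIN + clamp(h.flex, 0.0, 1.0) * (PWM_MAX - PWM_MIN)),
    }


print(hand_to_channels(HandState(roll_deg=22.5, pitch_deg=-90.0, yaw_rate_dps=0.0, flex=0.5)))
# → {'roll': 1750, 'pitch': 1000, 'yaw': 1500, 'throttle': 1500}
```

Clamping keeps an exaggerated gesture (the -90 degree pitch above) from producing an out-of-range pulse, which is one simple way to make the control feel predictable.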

Resources

Sponsors

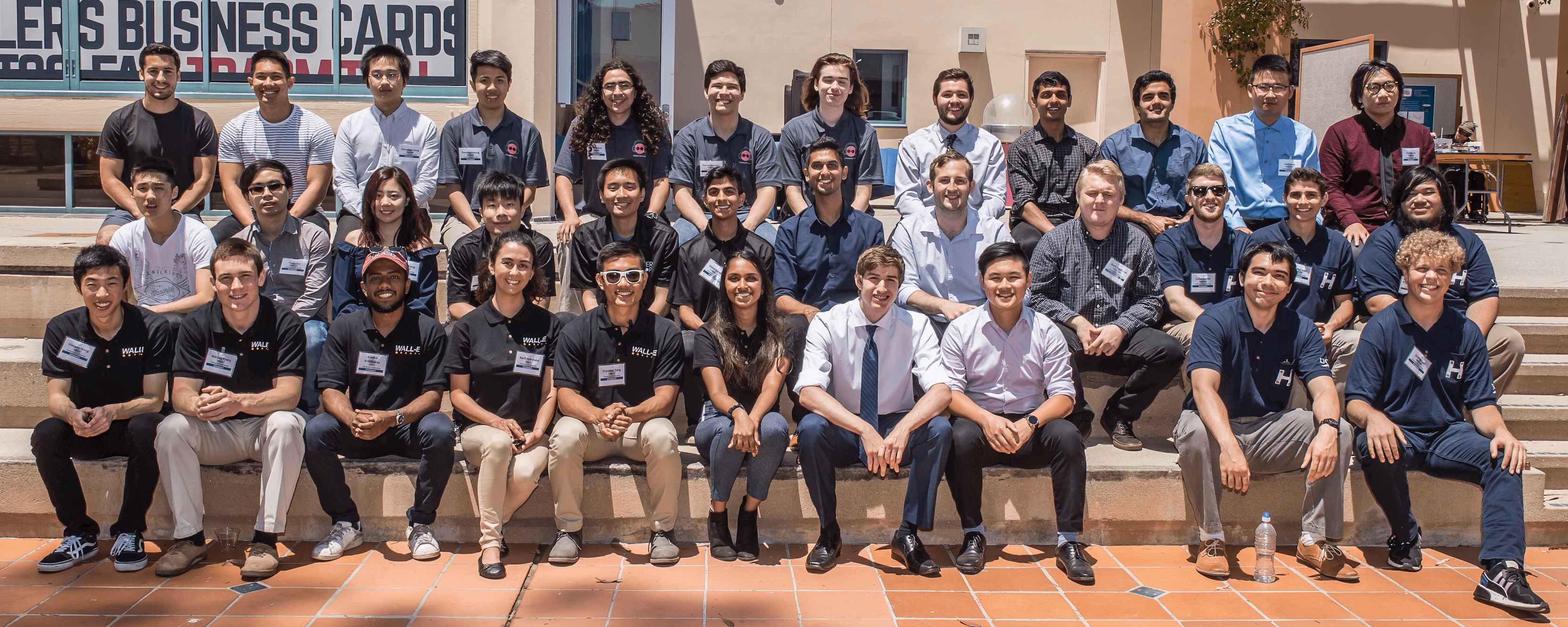

Hyperloop

Members

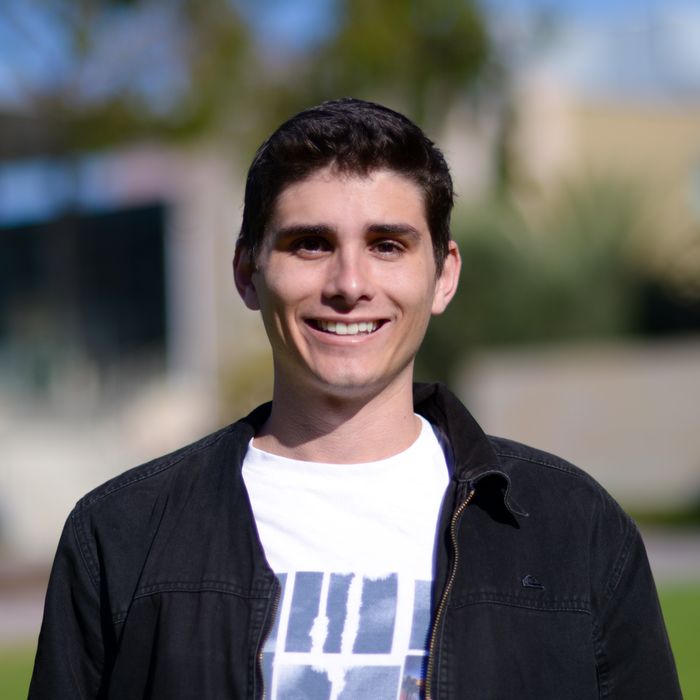

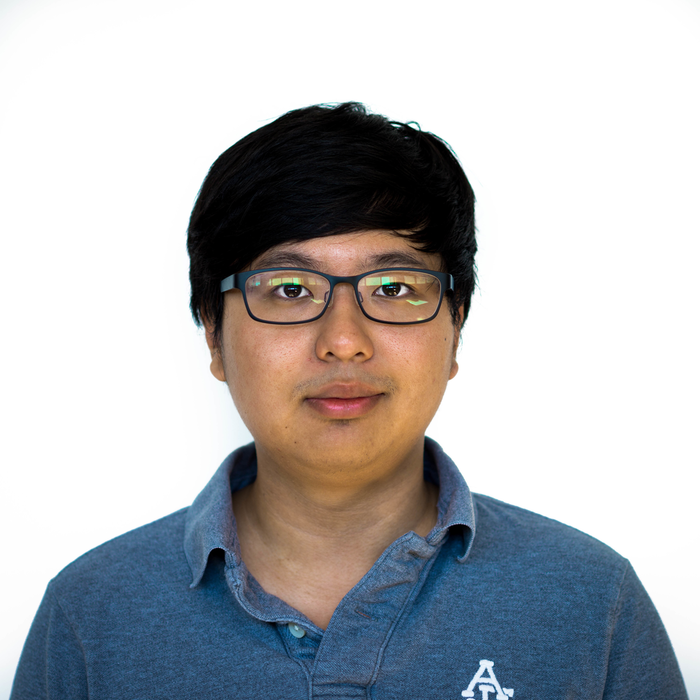

Alex Jun

Cameron Bijan

David Donaldson

Dylan Vanmali

Mark Wu

Description

Hyperloop is a proposed high-speed transportation system for travel between cities less than about 900 miles apart (e.g., Los Angeles and San Francisco). The system propels a capsule through a low-pressure steel tube while the capsule rides on nearly frictionless air bearings or magnets. The Embedded Systems Team uses an accurate sensor network to monitor the UCSB Hyperloop Pod's physical metrics and operates the pod's mechanical systems through a web application and various actuators. This multidisciplinary UCSB College of Engineering project involves Computer Engineering, Electrical Engineering, and Mechanical Engineering capstone students.

Resources

SPOT

Members

Brandon Pon

Bryan Lavin-Parmenter

Neil O'Bryan

Saurabh Gupta

Description

SPOT is an experimental human communication interface for astronauts, developed in conjunction with a human-computer interaction team at NASA. Its goal is to reduce an astronaut's cognitive load while maximizing productivity during future space exploration missions. SPOT does this by reducing reliance on vocal communication for sharing information between astronauts, using an easy-to-use, wireless, forearm-mounted wearable device. The device can transmit vital information such as alerts, location, and images in real time.

Resources

Sponsors

WALL-E

Members

Franklin Tang

Karli Yokotake

Karthik Kribakaran

Veena Chandran

Vincent Wang

Wesley Peery

Description

Ostracods are tiny crustaceans that create luminous courtship displays. WALL-E is a submersible low-light camera that can be deployed to record these displays so they can be analyzed with computer vision techniques. The project has two parts: the hardware setup that captures the footage, and the computer vision pipeline that extracts 3D points from the ostracod footage.
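If the footage is captured by two calibrated cameras (an assumption; the description does not say how depth is recovered), each flash seen in both views can be turned into a 3D point by linear (DLT) triangulation. The sketch below assumes known 3x4 projection matrices and already-matched pixel coordinates; the function names and the synthetic camera setup are hypothetical.

```python
import numpy as np


def triangulate(P1: np.ndarray, P2: np.ndarray, x1, x2) -> np.ndarray:
    """Linear (DLT) triangulation of one point seen in two views.

    P1, P2: 3x4 projection matrices from camera calibration (assumed known).
    x1, x2: (u, v) pixel coordinates of the same flash in each image.
    Returns the estimated (X, Y, Z) point in the world frame.
    """
    u1, v1 = x1
    u2, v2 = x2
    # Each view gives two linear constraints on the homogeneous point X:
    #   u * (P[2] @ X) - P[0] @ X = 0   and   v * (P[2] @ X) - P[1] @ X = 0
    A = np.array([
        u1 * P1[2] - P1[0],
        v1 * P1[2] - P1[1],
        u2 * P2[2] - P2[0],
        v2 * P2[2] - P2[1],
    ])
    # Least-squares solution: right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]


def project(P: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Project a homogeneous 3D point to pixel coordinates."""
    x = P @ X
    return x[:2] / x[2]


# Synthetic check with two hypothetical cameras 10 cm apart along the x-axis.
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-0.1], [0.0], [0.0]])])
X_true = np.array([0.05, -0.02, 1.5, 1.0])  # homogeneous point 1.5 m in front

print(triangulate(P1, P2, project(P1, X_true), project(P2, X_true)))  # approximately [0.05, -0.02, 1.5]
```

With real footage the matched coordinates are noisy, so the DLT result is usually treated as an initial estimate that later refinement can improve.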

Resources

Sponsors

GauchoHawk

Members

Jack Zang

Kurt Madland

Richard Young

Vikram Sastry

Yesh Ramesh

Description

The project goal is to expand the capabilities of the Pixhawk by adding inertial guidance, state estimation, a direct high-speed data transfer interface, an accurate GPS reference, and a precise time source for time-stamping data whenever GPS is unavailable. This will be achieved by developing a 'shield' daughterboard that connects the STM Nucleo processor pins to all of the newly added systems. In addition, to run the PX4 autopilot on the board, we will port the NuttX operating system to it.
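One common way to keep time-stamping data through GPS outages is holdover: while GPS is available, each pulse-per-second (PPS) edge pairs a local timer count with an exact second, which also measures how fast the local oscillator really runs; when GPS drops out, timestamps are extrapolated from the last edge at that measured rate. The Python sketch below only illustrates the idea. The class and method names are hypothetical, and it does not reflect the PX4 or NuttX APIs or the team's firmware.

```python
class HoldoverClock:
    """Hypothetical timestamp source disciplined by GPS pulse-per-second (PPS) edges.

    While GPS has a fix, each PPS edge pairs a local tick count with an exact UTC
    second. Between edges, and during GPS outages, time is extrapolated from the
    last edge using the measured tick rate of the local oscillator.
    """

    def __init__(self, nominal_hz: float):
        self.rate_hz = nominal_hz  # estimated local ticks per true second
        self.last_ticks = None     # local tick count at the most recent PPS edge
        self.last_utc = None       # UTC seconds at the most recent PPS edge

    def on_pps(self, ticks: int, utc_seconds: int) -> None:
        """Call on each PPS edge while GPS has a fix."""
        if self.last_ticks is not None and utc_seconds > self.last_utc:
            # Measure the oscillator's real rate over the interval between edges.
            self.rate_hz = (ticks - self.last_ticks) / (utc_seconds - self.last_utc)
        self.last_ticks, self.last_utc = ticks, utc_seconds

    def timestamp(self, ticks: int) -> float:
        """Convert a local tick count to UTC seconds; also valid during GPS outages."""
        if self.last_ticks is None:
            raise RuntimeError("no GPS reference received yet")
        return self.last_utc + (ticks - self.last_ticks) / self.rate_hz


# A nominally 1 MHz oscillator that actually runs 20 ppm fast (1,000,020 ticks/s).
clock = HoldoverClock(nominal_hz=1_000_000)
clock.on_pps(ticks=0, utc_seconds=100)
clock.on_pps(ticks=1_000_020, utc_seconds=101)
# GPS is then lost; a sample arrives 10.5 true seconds after the last PPS edge.
print(clock.timestamp(1_000_020 + 10_500_210))  # → 111.5
```

Without the rate correction, the 20 ppm oscillator in this example would add about 210 µs of error over the 10.5 s outage; a real design would also have to account for the oscillator drifting further while GPS is unavailable.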

Resources

Sponsors

TiresiaScope

Members

Brian Young

Devon Porcher

John Bowman

Timothy Kwong

Trevor Hecht

Description

TiresiaScope's main objective is to produce a headset for the blind that uses ranging sensors to detect objects around the user and a surround-sound system to pinpoint the objects' locations for the user. The headset may also feature a camera connected over Wi-Fi to a server that recognizes nearby signs and reads them aloud. A 360-degree array of ranging sensors will measure the distances to nearby objects, and combining multiple sensor types, such as ultrasonic and infrared, will provide better coverage. The processor will map these nearby objects and translate the map into binaural sound information, which indicates each object's direction through simulated surround sound and its distance through a scale of musical notes. This audio will be played through the headphones.
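The distance-to-sound mapping can be sketched as follows, assuming a 4 m maximum sensor range, eight distance bands that step up a C major scale as objects get closer, and constant-power stereo panning for direction. Panning is a deliberately simpler stand-in for true binaural (HRTF-based) rendering, and all constants and names here are hypothetical.

```python
import math

MAJOR_SCALE = [0, 2, 4, 5, 7, 9, 11]  # C major scale as semitone offsets within an octave
BASE_MIDI = 60      # middle C (C4): the note for the farthest detectable object
MAX_RANGE_M = 4.0   # assumed maximum sensor range in meters
STEPS = 8           # number of distance bands, one scale step each


def midi_to_hz(note: int) -> float:
    """Equal-temperament frequency with A4 (MIDI note 69) = 440 Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)


def distance_to_note(distance_m: float) -> int:
    """Hypothetical mapping: closer objects climb higher on the scale."""
    d = min(max(distance_m, 0.0), MAX_RANGE_M)
    step = round((1.0 - d / MAX_RANGE_M) * (STEPS - 1))  # 0 = farthest band, STEPS - 1 = nearest
    octave, degree = divmod(step, len(MAJOR_SCALE))
    return BASE_MIDI + 12 * octave + MAJOR_SCALE[degree]


def azimuth_to_gains(azimuth_deg: float) -> tuple:
    """Constant-power stereo pan: -90 = hard left, 0 = center, +90 = hard right.

    A simple stand-in for binaural (HRTF-based) rendering: objects behind the user
    fold onto the same left/right position as their mirror image in front.
    """
    az = math.degrees(math.asin(math.sin(math.radians(azimuth_deg))))  # fold into [-90, 90]
    theta = (az + 90.0) / 180.0 * (math.pi / 2)  # 0 (hard left) .. pi/2 (hard right)
    return math.cos(theta), math.sin(theta)      # (left gain, right gain); squares sum to 1


note = distance_to_note(1.0)    # object 1 m away
print(note, midi_to_hz(note))   # → 69 440.0
print(azimuth_to_gains(-90.0))  # object directly to the left: almost all signal in the left channel
```

Quantizing distance to scale steps, rather than sweeping pitch continuously, keeps every tone musical and makes changes between bands easier to hear; the front/back ambiguity of plain panning is one reason a full binaural renderer would be preferable.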

Resources

Sponsors

DeepVision

Members

Charlie Xu

Chenghao Jiang

Jenny Zheng

Terry Xie

Description

Many drones offer target tracking, which follows a chosen target in real time by analyzing the video recorded by the drone's camera. However, tracking is difficult across varied conditions because the target can move in many different ways and can be blocked from view. DeepVision aimed to implement neural-network-based target tracking for drones on an embedded system. By combining machine learning and neural networks with computer vision, an algorithm running on an embedded system can learn from a training dataset and achieve high-accuracy tracking. The system was designed for the 2018 DAC System Design Contest. Our team used the training dataset provided by the industry sponsor DJI to develop an object tracking algorithm based on neural networks and deep learning. In the contest, the design was evaluated on a hidden dataset in terms of processing speed, tracking accuracy, and power consumption. Our team also implemented the algorithm on an embedded system that communicates with the drone in real time.

The DeepVision team ranked 10th out of 53 teams in the DAC 2018 System Design Contest.
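Tracking accuracy for bounding-box trackers is commonly scored by intersection over union (IoU) between the predicted box and the ground-truth box. The sketch below assumes that metric and averages it over a sequence of frames; it illustrates the measure only and is not the contest's official scoring code. The box format and function names are assumptions.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes (0.0 = disjoint, 1.0 = identical)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def mean_iou(predicted: Sequence[Box], ground_truth: Sequence[Box]) -> float:
    """Average per-frame IoU over a sequence of tracked boxes."""
    scores = [iou(p, g) for p, g in zip(predicted, ground_truth)]
    return sum(scores) / len(scores) if scores else 0.0


print(iou((0, 0, 10, 10), (5, 0, 15, 10)))  # → 0.3333333333333333
```

Because IoU penalizes both a shifted box and a box of the wrong size, it rewards trackers that follow the target's extent as well as its position; in a contest setting it would be weighed against throughput and energy use.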