Menu:

Behrooz Parhami's ECE 254B Course Page for Winter 2021

Adv. Computer Architecture: Parallel Processing

Page last updated on 2021 March 21

Enrollment code: 13185

Prerequisite: ECE 254A (can be waived, but ECE 154B is required)

Class meetings: None (3-4 hours of pre-recorded lectures per week)

Instructor: Professor Behrooz Parhami

Open office hours: MW 10:00-11:00 (via Zoom, link to be provided)

Course announcements: Listed in reverse chronological order

Course calendar: Schedule of lectures, homework, exams, research

Homework assignments: Five assignments, worth a total of 40%

Exams: None for winter 2021

Research paper and poster: Worth 60%

Research paper guidelines: Brief guide to format and contents

Poster presentation tips: Brief guide to format and structure

Policy on academic integrity: Please read very carefully

Grade statistics: Range, mean, etc. for homework and exam grades

References: Textbook and other sources (Textbook's web page)

Lecture slides: Available on the textbook's web page

Miscellaneous information: Motivation, catalog entry, history

Course Announcements

2021/03/21: The winter 2021 offering of ECE 254B is officially over and grades have been reported to the Registrar. Wishing you an enjoyable and safe spring break and successful navigation through the rest of your program of study at UCSB.

2021/03/17: I have received the final research papers from all students and will begin evaluating them tomorrow. Grades for the papers, technical feedback, and the overall course grades should be sent out by Tuesday 3/23.

2021/03/08: You are now done with homework assignments for the course. Only the research component remains to be satisfied. The deadline for submitting your research presentation poster, in PDF format, is W 3/10 (any time). We will not have actual presentations, so preparing the poster is just a way of practicing to present your research results.

In addition to submitting your research poster on 3/10, please also include it as an appendix to your completed PDF paper, which will be due on Wednesday, March 17 (any time). This will allow me to deal with a single document in assessing all aspects of your research. The March 17 deadline is firm, because I will need about a week for careful reading and comparative assessment of your research papers, before my 3/23 grading deadline.

A possible venue for publishing your research paper is the 32nd IEEE Int'l Conf. Application-Specific Systems, Architectures, and Processors (ASAP 2021), held virtually from July 7 to July 9, 2021. Abstract submission deadline is March 29 and full papers are due April 5.

I will be talking in an upcoming IEEE Central Coast Section Zoom meeting, as an IEEE Distinguished Lecturer, under the title "Eight Key Ideas in Computer Architecture from Eight Decades of Innovation": Wednesday, March 17, 2021, 6:30 PM PDT. [Registration link (free for everyone)]

IEEE Central Coast Section is also sponsoring a Video-Essay Contest for students with cash prizes, but this one is limited to IEEE student members. [Details & Registration]

A just-published article in Communications of the ACM, March 2021 issue, entitled "The Decline of Computers as a General-Purpose Technology," argues that "technological and economic forces are now pushing computing away from being general-purpose and toward specialization." This may be a good general reference for the introduction section of your research paper.

I will maintain my MW 10:00-11:00 AM Zoom office hours through the end of the quarter (March 17).

2021/03/01: All course lectures and homework assignments have already been posted and lecture slides have been updated; nothing new will be forthcoming. HW5 will be due on Monday, March 8, 10:00 AM PST. Today was the deadline for submitting the final list of references and a provisional abstract for your research project. I have already received all submissions and found them to be acceptable, with feedback provided in a couple of cases. The next research milestone is submission of your research presentation poster by Wednesday, March 10 (any time). We will not have actual presentations, so preparing the poster is just a way of practicing to present your research results. In addition to submitting your research poster on 3/10, please also include it as an appendix to your completed paper, which will be due on Wednesday, March 17 (any time). This will allow me to deal with a single document in assessing all aspects of your research.

2021/02/22: HW5, the last one for the course, has been posted to the homework area below a few days ahead of schedule. Also, links to Lectures 15 & 16, the last two for the course, have been posted within the course schedule. March 1 is the deadline for submitting your final list of references and a provisional abstract for your research paper. The provisional abstract reflects your current understanding of what will appear in the paper and can be changed later to reflect the actual course of your research and the results obtained. Today, Monday 2/22, at 6:00 PM PST, Dr. Valerie E. Taylor (Argonne Nat'l Lab) will present a Zoom talk entitled "Energy-Efficiency Tradeoffs for Parallel Scientific Applications" (free registration).

2021/02/12: HW4 has been posted, as have links to Lectures 13 & 14. All lecture slides are now up to date for winter 2021. Lists of preliminary references submitted to me are all acceptable, with shortcomings in two cases communicated privately to the students involved. If you want to learn about quantum computing, Professor Kerem Camsari will present a talk entitled "Understanding Quantum Computing Through Negative Probabilities" on W 2/17, 6:30 PM PST. Here is the registration link for the free IEEE Central Coast Section Zoom event.

2021/02/06: No new lectures were posted this week, but I am working on Lectures 13-16, the final four for the course scheduled for February 24 to March 08, and hope to start posting them soon. HW4 will be posted before the 3-day Presidents' Day weekend. Hope you are making good progress on your research project and can use the week of February 15-19 ("research focus" week, with no lecture scheduled) to advance your research. For those of you who are interested, my spring-quarter graduate course on computer arithmetic (ECE 252B) has been scheduled on MW 12:00-1:50 and will be conducted on-line. The next IEEE Central Coast Section technical talk, "Understanding Quantum Computing through Negative Probabilities" (by Professor Kerem Camsari, UCSB), will be on Wednesday 2/17, 6:30 PM PST. You don't have to be an IEEE member to register for this free event. This lecture is highly relevant to our course, as there is great hope for quantum computing to provide ultra-high performance for solving some of the thorniest computational problems.

2021/02/01: Links to Lectures 10-12 have been posted within the "Course Calendar" section below. Lecture slides for Part III of the textbook have been updated for winter 2021.

2021/01/26: HW3 has been posted to the homework area below. Please note the correction to textbook and slides, which was posted yesterday.

2021/01/25: Correction to textbook Page 113 (and slide 35 in Part II'): In the fourth line of the parallel selection algorithm, change "divide S into p subsequences S(j) of size |S|/p" to "divide S into |S|/q subsequences S(j) of size q"
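The corrected division step can be illustrated with a sequential Python sketch of rank-based selection. This is only an illustration under stated assumptions, not the textbook's PRAM algorithm: the function name `select`, the default `q = 5`, the base-case threshold, and the use of the lower median are all choices made here.

```python
def select(S, k, q=5):
    """Return the k-th smallest element (1-based) of the list S.

    Sequential sketch; q is the subsequence size (assumed >= 2) and is a
    hypothetical parameter chosen for illustration.
    """
    if not 1 <= k <= len(S):
        raise ValueError("k must be in 1..len(S)")
    if q < 2:
        raise ValueError("q must be at least 2")
    if len(S) <= q:
        return sorted(S)[k - 1]
    # Corrected step: divide S into about |S|/q subsequences of size q
    # (the last one may be shorter when q does not divide |S|).
    chunks = [S[i:i + q] for i in range(0, len(S), q)]
    medians = [sorted(c)[(len(c) - 1) // 2] for c in chunks]
    # Median of the medians serves as the pivot m.
    m = select(medians, (len(medians) + 1) // 2, q)
    L = [x for x in S if x < m]
    E = [x for x in S if x == m]
    G = [x for x in S if x > m]
    if k <= len(L):
        return select(L, k, q)
    if k <= len(L) + len(E):
        return m
    return select(G, k - len(L) - len(E), q)
```

In a parallel setting, the per-subsequence median computations are the part that could be distributed among processors; the sketch above runs them one after another.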

2021/01/22: Hope you are doing well despite the worsening pandemic and that you have been able to keep up with the progress of ECE 254B. A link to Lecture 7 has been posted within the "Course Calendar" section below. Lectures 8-9 are being prepared and will be posted within 2-3 days. Research topic assignments have been finalized and appear in the "Research Paper and Presentation" section below. Please keep the title provided for your research paper, that is, do not change the title or focus of your paper. This is important, as I will be sending these titles to the "UCSB Reads 2021" program for inclusion in campus participation archives.

2021/01/16: Links to Lectures 5 & 6 have been posted within the "Course Calendar" section below. Lecture slides for Parts II' and II" have been updated for winter 2021. Homework 1 will be due by 10:00 AM on Monday 1/18. Homework 2 has already been posted. Your research topic preferences (first to third choices) are due by W 1/20, but please submit your preferences earlier if you can. I may be able to assign topics before the specified target date of 1/25, which will in turn give you more time to do research and write your paper. With my feedback on HW1, I will include solutions and a private link for you to access your copy of the "UCSB Reads 2021" book. The book is required reading for use in the section of your paper dealing with fairness/bias in ML algorithms.

[P.S.: UCSB talk of possible interest: Peng Gu, PhD defense, "Architecture Supports and Optimizations for Memory-Centric Processing System," Zoom Meeting: https://ucsb.zoom.us/j/7147136139; Meeting ID 714 713 6139; Passcode 123456]

2021/01/09: Links to Lectures 3 & 4 have been posted within the "Course Calendar" section below. I have acquired links for students to get free e-copies of the "UCSB Reads 2021" book for use in completing a required section of the term paper. I will send these links out via e-mail shortly.

2021/01/02: Links to Lectures 1 & 2 have been posted within the "Course Calendar" section below. I will post HW1 no later than Monday, January 04, a couple of days ahead of schedule.

2020/12/28: I have updated the research section of this Web page with new topics and references for winter 2021. Please take a look to familiarize yourselves with what will be required for the research component, which is worth 60% of your course grade.

2020/12/23: Welcome to the ECE 254B web page for winter 2021! As of today, six students have signed up for the course. I have sent out an introductory e-mail and will issue periodic reminders and announcements from GauchoSpace. However, my primary mode of communication about the course will be through this Web page. Make sure to consult this "Announcements" section regularly. Looking forward to e-meeting all of you!

Course Calendar

Course lectures and homework assignments have been scheduled as follows. This schedule will be strictly observed. In particular, no extension is possible for homework due dates; please start work on the assignments early. Each lecture covers topics in 1-2 chapters of the textbook. Chapter numbers are provided in parentheses, after day & date. PowerPoint and PDF files of the lecture slides can be found on the textbook's web page.

Day & Date (book chapters) Lecture topic [HW posted/due] {Research milestones}

M 01/04 (1) Introduction to parallel processing Lecture 1

W 01/06 (2) A taste of parallel algorithms [HW1 posted; chs. 1-4] Lecture 2

M 01/11 (3-4) Complexity and parallel computation models Lecture 3

W 01/13 (5) The PRAM shared-memory model and basic algorithms {Research topics issued} Lecture 4

M 01/18 No lecture: Martin Luther King Holiday [HW1 due] [HW2 posted; chs. 5-6C]

W 01/20 (6A) More shared-memory algorithms {Research topic preferences due} Lecture 5

M 01/25 (6B-6C) Shared memory implementations and abstractions {Research assignments} Lecture 6

W 01/27 (7) Sorting and selection networks [HW2 due] [HW3 posted; chs. 7-8C] Lecture 7

M 02/01 (8A) Search acceleration circuits Lecture 8

W 02/03 (8B-8C) Other circuit-level examples Lecture 9

M 02/08 (9) Sorting on 2D mesh or torus architectures [HW3 due] Lecture 10

W 02/10 (10) Routing on 2D mesh or torus architectures {Preliminary references due} Lecture 11

M 02/15 No lecture: Presidents' Day Holiday [HW4 posted; chs. 9-12]

W 02/17 No lecture: Research focus week

M 02/22 (11-12) Other mesh/torus concepts Lecture 12

W 02/24 (13) Hypercubes and their algorithms [HW4 due] [HW5 posted; chs. 13-16] Lecture 13

M 03/01 (14) Sorting and routing on hypercubes {Final references & provisional abstract due} Lecture 14

W 03/03 (15-16) Other interconnection architectures Lecture 15

M 03/08 (17) Network embedding and task scheduling [HW5 due] Lecture 16

W 03/10 No lecture: Research focus {PDF of research poster due}

W 03/17 {Research paper PDF file due; include submitted poster as an appendix}

T 03/23 {Course grades due by midnight}

Homework Assignments

- Turn in solutions as a single PDF file attached to an e-mail sent by the due date/time.

- Because solutions will be handed out on the due date, no extension can be granted.

- Include your name, course name, and assignment number at the top of the first page.

- If homework is handwritten and scanned, make sure that the PDF is clean and legible.

- Although some cooperation is permitted, direct copying will have severe consequences.

Homework 1: Introduction, complexity, and models (due M 2021/01/18, 10:00 AM)

Do the following problems from the textbook or defined below: 1.9, 1.16, 1.19, 2.6, 3.8, 4.5

1.16 Amdahl's law

A multiprocessor is built out of 5-GFLOPS processors. What is the maximum allowable sequential fraction of a job in Amdahl's formulation if the multiprocessor's performance is to exceed 1 TFLOPS?
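As a hedged aid for exploring this problem numerically, the sketch below encodes Amdahl's speedup formula, 1/(f + (1 - f)/p), and its inverse. The function names are hypothetical, and the sketch assumes that "performance" scales as the single-processor rate times the speedup.

```python
def amdahl_speedup(f: float, p: int) -> float:
    """Speedup with p processors when a fraction f of the job is sequential."""
    if not 0.0 <= f <= 1.0 or p < 1:
        raise ValueError("need 0 <= f <= 1 and p >= 1")
    return 1.0 / (f + (1.0 - f) / p)


def max_sequential_fraction(target_speedup: float, p: int) -> float:
    """Largest f for which p processors still reach target_speedup.

    Obtained by solving 1/S = f + (1 - f)/p for f.
    """
    if p <= 1 or not 1.0 <= target_speedup <= p:
        raise ValueError("need p > 1 and 1 <= target_speedup <= p")
    return (1.0 / target_speedup - 1.0 / p) / (1.0 - 1.0 / p)


# Required speedup for the problem's numbers (5 GFLOPS per processor, 1 TFLOPS goal).
required = 1e12 / 5e9
for p in (250, 1000, 100000):
    print(p, max_sequential_fraction(required, p))
```

Running the loop for growing p shows how the permissible sequential fraction approaches a limit that depends only on the required speedup.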

1.19 Parallel processing effectiveness

Show that the asymptotic time complexity for the parallel addition of n numbers does not change if we use n/log_2 n processors instead of n/2 processors required to execute the computation graph of Fig. 1.14 in minimum time.
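One way to build intuition before writing a proof is to count addition steps for a two-phase scheme: each processor first sums its own block sequentially, then the partial sums are combined by a binary tree. The sketch below is a step counter under that assumed scheme; the function name and the scheme itself are illustrative choices, not taken from the textbook.

```python
def parallel_add_steps(n: int, p: int) -> int:
    """Upper bound on addition steps to sum n numbers with p processors.

    Phase 1: each processor adds a block of at most ceil(n/p) numbers.
    Phase 2: the partial sums are combined by a binary tree.
    """
    if n < 1 or p < 1:
        raise ValueError("n and p must be positive")
    p = min(p, n)                      # extra processors would stay idle
    block = -(-n // p)                 # ceil(n / p)
    local_steps = block - 1
    tree_steps = (p - 1).bit_length()  # ceil(log2(p)) for p >= 1
    return local_steps + tree_steps


for k in (4, 10, 20):
    n = 2 ** k
    print(n, parallel_add_steps(n, n // 2), parallel_add_steps(n, n // k))
```

Comparing the two step counts for increasing n suggests that they differ by a bounded factor, which is what the asymptotic claim requires.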

Homework 2: Shared-memory parallel processing (due W 2021/01/27, 10:00 AM)

Do the following problems from the textbook or defined below: 5.4, 5.8c, 6.3, 6.14 (corrected), 16.19, 16.25

6.14 Memory access networks (corrected statement)

Consider the butterfly memory access network depicted in Fig. 6.9, but with only 8 processors and 8 memory banks, one connected to each circular node in columns 0 and 3.

a. Show that there exist permutations that are not realizable.

b. Show that the shift-permutation, where processor i accesses memory bank i + k mod p, for some constant k, is realizable.
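Candidate permutations for both parts can be checked mechanically with a small routing model. The sketch below assumes rows 0 to 7 and columns 0 to 3, with stage s setting bit s of the row address to the corresponding bit of the destination (least-significant bit first), and treats a permutation as realizable when no two source-to-destination paths share a link. The dimension order in Fig. 6.9 may differ, and the function names are hypothetical.

```python
def butterfly_path(src: int, dst: int, q: int) -> list:
    """Rows visited in columns 0..q on the unique path from src to dst."""
    row = src
    rows = [row]
    for s in range(q):
        mask = 1 << s
        row = (row & ~mask) | (dst & mask)  # stage s fixes bit s
        rows.append(row)
    return rows


def is_realizable(perm, q: int) -> bool:
    """True if all paths i -> perm[i] are link-disjoint in the assumed model."""
    used = set()
    for src, dst in enumerate(perm):
        rows = butterfly_path(src, dst, q)
        for s in range(q):
            link = (s, rows[s], rows[s + 1])
            if link in used:
                return False
            used.add(link)
    return True


p, q = 8, 3
for k in range(p):
    shift = [(i + k) % p for i in range(p)]
    print(k, is_realizable(shift, q))
```

The same `is_realizable` function can be applied to any list representing a permutation of 0 to 7 when searching for a non-realizable example.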

16.19 Chordal ring networks

Show that the diameter of a p-node, degree-4 chordal ring, with chords of length p^0.5, is p^0.5 – 1.
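The claim can be sanity-checked for small perfect-square values of p with a breadth-first search. The sketch below assumes that node i is linked to nodes i ± 1 and i ± p^0.5 (mod p); because such a ring looks the same from every node, the search starts from node 0 only. The function name is hypothetical, and a numerical check of this kind does not replace the requested proof.

```python
from collections import deque
from math import isqrt


def chordal_ring_diameter(p: int) -> int:
    """Diameter of a p-node ring with chords of length sqrt(p), found by BFS."""
    c = isqrt(p)
    if c * c != p or p < 9:
        raise ValueError("p must be a perfect square, at least 9")
    dist = {0: 0}
    queue = deque([0])
    while queue:
        u = queue.popleft()
        for step in (1, -1, c, -c):
            v = (u + step) % p
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return max(dist.values())


for p in (9, 16, 25, 36, 49, 64):
    print(p, chordal_ring_diameter(p), isqrt(p) - 1)
```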

16.25 Hoffman-Singleton Graph

A Hoffman-Singleton graph is built from ten copies of a 5-node ring as follows. The rings are named P0-P4 and Q0-Q4. The nodes in each ring are labeled 0 through 4 and interconnected as a standard (bidirectional) ring. Additionally, node j of Pi is connected to node ki + 2j (mod 5) of Qk.

[According to Eric Weisstein's World of Mathematics, this graph was described by Robertson 1969, Bondy and Murty 1976, and Wong 1982, with other constructions given by Benson and Losey 1971 and Biggs 1993.]

a. Determine the node degree of the Hoffman-Singleton graph.

b. Determine the diameter of the Hoffman-Singleton graph.

c. Prove that the Hoffman-Singleton graph is a Moore graph.

d. Show that the Hoffman-Singleton graph contains many copies of the Petersen graph.
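The construction described in the problem statement can be built directly in code, which gives a way to check answers to parts a and b numerically. The sketch below follows the stated rule (node j of Pi linked to node ki + 2j mod 5 of Qk); the tuple-based node naming and the function names are assumptions made for illustration.

```python
from collections import deque
from itertools import product


def hoffman_singleton() -> dict:
    """Adjacency sets for the 50-node graph built from rings P0-P4 and Q0-Q4."""
    adj = {(ring, i, j): set()
           for ring in "PQ" for i in range(5) for j in range(5)}

    def connect(u, v):
        adj[u].add(v)
        adj[v].add(u)

    # Each of the ten rings is a standard bidirectional 5-node ring.
    for ring, i, j in list(adj):
        connect((ring, i, j), (ring, i, (j + 1) % 5))
    # Node j of Pi is connected to node (k*i + 2*j) mod 5 of Qk.
    for i, j, k in product(range(5), repeat=3):
        connect(("P", i, j), ("Q", k, (k * i + 2 * j) % 5))
    return adj


def eccentricity(adj: dict, source) -> int:
    """Largest BFS distance from source to any other node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return max(dist.values())


def degree_and_diameter(adj: dict):
    """Set of node degrees found in the graph, and the graph's diameter."""
    degrees = {len(neighbors) for neighbors in adj.values()}
    diameter = max(eccentricity(adj, u) for u in adj)
    return degrees, diameter


print(degree_and_diameter(hoffman_singleton()))
```

A set with a single degree value indicates a regular graph; parts c and d still call for arguments rather than enumeration.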

Homework 3: Circuit model of parallel processing (due M 2021/02/08, 10:00 AM)

Do the following problems from the textbook or defined below: 7.3, 7.7, 7.20, 8.6, 8.13, 8.21

7.20 Delay and cost of sorting networks

Let Cmin(n) and Dmin(n) be the minimal cost and minimal delay for an n-sorter constructed only of 2-sorters (the two lower bounds may not be realizable in a single circuit).

a. Prove or disprove: Dmin(n) is a strictly increasing function of n; i.e., Dmin(n + 1) > Dmin(n).

b. Prove or disprove: Cmin(n) is a strictly increasing function of n; i.e., Cmin(n + 1) > Cmin(n).

8.21 Parallel prefix networks

In the divide-and-conquer scheme of Fig. 8.7 for designing a parallel prefix network, one may observe that all but one of the outputs of the right block can be produced one time unit later without affecting the overall latency of the network. Show that this observation leads to a linear-cost circuit for k-input parallel prefix computation with ⌈log_2 k⌉ latency. Hint: Define type-x parallel prefix circuits, x ≥ 0, that produce their leftmost output with ⌈log_2 k⌉ latency and all other outputs with latencies not exceeding ⌈log_2 k⌉ + x, where k is the number of inputs. Write recurrences that relate C_x(k) for such circuits [Ladn80].

[Ladn80] Ladner, R.E. and M.J. Fischer, "Parallel Prefix Computation," Journal of the ACM, Vol. 27, No. 4, pp. 831-838, October 1980.

Homework 4: Mesh/torus-connected parallel computers (due W 2021/02/24, 10:00 AM)

Do the following problems from the textbook or defined below: 9.3, 9.9, 10.17, 11.2, 11.22, 12.2

10.17 Mesh routing for matrix transposition

An m × m matrix is stored on an m × m processor array, one element per processor. Consider matrix transposition (sending each element aij from processor ij to processor ji) as a packet routing problem.

a. Find the routing time and required queue size with store-and-forward row-first routing.

b. Discuss the routing time with column-first wormhole routing, assuming 20-flit packets.

11.22 Odd-even reduction

We used the odd-even reduction method to solve a tridiagonal system of m linear equations on an m-processor linear array in asymptotically optimal O(m) time.

a. What would the complexity of odd-even reduction be on an m-processor CREW PRAM?

b. Can you make your algorithm more efficient by using fewer processors?

Homework 5: Hypercubic & other parallel computers (due M 2021/03/08, 10:00 AM)

Do the following problems from the textbook or defined below: 13.3, 13.17, 14.10, 15.7, 15.19, 16.7abc

13.17 Embedding multigrids and pyramids into hypercubes

a. Show that a 2D multigrid whose base is a 2^(q–1)-node square mesh, with q odd and q ≥ 5, and hence a pyramid of the same size, cannot be embedded in a q-cube with dilation 1.

b. Show that the 21-node 2D multigrid with a 4×4 base can be embedded in a 5-cube with dilation 2 and congestion 1 but that the 21-node pyramid cannot.

15.19 Routing on Benes networks

Show edge-disjoint paths for each of the following permutations of the inputs (0, 1, 2, 3, 4, 5, 6, 7) on an 8-input Benes network.

a. (6, 2, 3, 4, 5, 1, 7, 0)

b. (5, 4, 2, 7, 1, 6, 3, 0)

c. (1, 7, 3, 4, 2, 6, 0, 5)

Sample Exams and Study Guides (not applicable to winter 2021)

The following sample exams using problems from the textbook are meant to indicate the types and levels of problems, rather than the coverage (which is outlined in the course calendar). Students are responsible for all sections and topics, in the textbook, lecture slides, and class handouts, that are not explicitly excluded in the study guide that follows the sample exams, even if the material was not covered in class lectures.

Sample Midterm Exam (105 minutes) (Chapters 8A-8C do not apply to this year's midterm)

Textbook problems 2.3, 3.5, 5.5 (with i + s corrected to j + s), 7.6a, and 8.4ac; note that problem statements might change a bit for a closed-book exam.

Midterm Exam Study Guide

The following sections are excluded from Chapters 1-7 of the textbook to be covered in the midterm exam, including the three new chapters named 6A-C (expanding on Chapter 6):

3.5, 4.5, 4.6, 6A.6, 6B.3, 6B.5, 6C.3, 6C.4, 6C.5, 6C.6, 7.6

Sample Final Exam (150 minutes) (Chapters 1-7 do not apply to this year's final)

Textbook problems 1.10, 6.14, 9.5, 10.5, 13.5a, 14.10, 16.1; note that problem statements might change a bit for a closed-book exam.

Final Exam Study Guide

The following sections are excluded from Chapters 8A-19 of the textbook to be covered in the final exam:

8A.5, 8A.6, 8B.2, 8B.5, 8B.6, 9.6, 11.6, 12.5, 12.6, 13.5, 15.5, 16.5, 16.6, 17.1, 17.2, 17.6, 18.6, 19

Research Paper and Presentation

Our research focus this quarter will be on the topical area of machine learning. We will study the intersection of machine learning with high-performance computing, that is, how the meteoric spread of machine learning techniques affects the field of parallel/distributed computing and how research in the latter field can help solve the challenges brought about by machine-learning applications. To increase the level of novelty, we will combine the fields of parallel processing and machine learning with unconventional or emerging technologies to determine how high-performance computing solutions for machine learning will unfold once we go beyond standard hardware/software being used in today's implementations.

Here are a handful of general references on machine learning and associated hardware architectures.

[Jord15] M. I. Jordan and T. M. Mitchell, "Machine Learning: Trends, Perspectives, and Prospects," Science, Vol. 349, No. 6245, 2015, pp. 255-260.

[Shaf18] M. Shafique et al., "An Overview of Next-Generation Architectures for Machine Learning: Roadmap, Opportunities and Challenges in the IoT Era," Proc. Design Automation & Test Europe Conf., 2018, pp. 827-832.

[Kham19] A. Khamparia and K. M. Singh, "A Systematic Review on Deep Learning Architectures and Applications," Expert Systems, Vol. 36, No. 3, 2019, p. e12400.

Important concerns in machine learning have to do with direct & indirect impacts on humans: Fairness, bias, and security/reliability/safety. We will tie our studies into "UCSB Reads 2021" by having each student include a section entitled "Bias and Fairness Considerations" in his/her paper. A free copy of the "UCSB Reads 2021" selection, cited below, will be provided to each student. I have also included in the following an introductory reference on bias and fairness issues in machine learning.

[Khan18] P. Khan-Cullors and A. Bandele, When They Call You a Terrorist: A Black Lives Matter Memoir, St. Martin's Press, 2018 ("UCSB Reads 2021" book selection). [My book review on GoodReads]

[Mehr19] N. Mehrabi, F. Morstatter, N. Saxena, K. Lerman, and A. Galstyan, "A Survey of Bias and Fairness in Machine Learning," arXiv document 1908.09635, 2019/09/17.

Here is a list of research paper titles. In topic selection, you can either propose a topic of your choosing, which I will review and help you refine into something that would be manageable and acceptable for a term paper, or you can give me your first to third choices among the topics that follow by the "Research topic preferences due" date. I will then assign a topic to you within a few days, based on your preferences and those of your classmates. Sample references follow the titles to help define the topics and serve as starting points.

1. Parallel Processing in Machine Learning Using Tabular Schemes (Assigned to: TBD)

[Jian18] H. Jiang, L. Liu, P. P. Jonker, D. G. Elliott, F. Lombardi, and J. Han, "A High-Performance and Energy-Efficient FIR Adaptive Filter Using Approximate Distributed Arithmetic Circuits," IEEE Trans. Circuits and Systems I, Vol. 66, No. 1, 2018, pp. 313-326.

[Parh19] B. Parhami, "Tabular Computation," In Encyclopedia of Big Data Technologies, S. Sakr and A. Zomaya (eds.), Springer, 2019.

2. Parallel Processing in Machine Learning Using Neuromorphic Chips (Assigned to: Trenton Rochelle)

[Gree20] S. Greengard, "Neuromorphic Chips Take Shape," Communications of the ACM, Vol. 63, No. 8, pp. 9-11, July 2020.

[Sung18] C. Sung, H. Hwang, and I. K. Yoo, "Perspective: A Review on Memristive Hardware for Neuromorphic Computation," J. Applied Physics, Vol. 124, No. 15, 2018, p. 151903.

3. Parallel Processing in Machine Learning Using Biological Methods (Assigned to: Jennifer E. Volk)

[Bell19] G. Bellec, F. Scherr, E. Hajek, D. Salaj, R. Legenstein, and W. Maass, "Biologically Inspired Alternatives to Backpropagation Through Time for Learning in Recurrent Neural Nets," arXiv document 1901.09049, 2019/02/21.

[Purc14] O. Purcell and T. K. Lu, "Synthetic Analog and Digital Circuits for Cellular Computation and Memory," Current Opinion in Biotechnology, Vol. 29, October 2014, pp. 146-155.

4. Parallel Processing in Machine Learning Using FPGA Assist (Assigned to: Varshika Mirtini)

[Lace16] G. Lacey, G. W. Taylor, and S. Areibi, "Deep Learning on FPGAs: Past, Present, and Future," arXiv document 1602.04283, 2016/02/13.

[Menc20] O. Mencer et al., "The History, Status, and Future of FPGAs," Communications of the ACM, Vol. 63, No. 10, October 2020, pp. 36-39.

5. Parallel Processing in Machine Learning Using Photonic Accelerators (Assigned to: Guyue Huang)

[Kita19] K. I. Kitayama, M. Notomi, M. Naruse, K. Inoue, S. Kawakami, and A. Uchida, "Novel Frontier of Photonics for Data Processing—Photonic Accelerator," APL Photonics, Vol. 4, No. 9, 2019, p. 090901.

[Mehr18] A. Mehrabian, Y. Al-Kabani, V. J. Sorger, and T. El-Ghazawi, "PCNNA: A Photonic Convolutional Neural Network Accelerator," In Proc. 31st IEEE Int'l System-on-Chip Conf., 2018, pp. 169-173.

6. Parallel Processing in Machine Learning Using Reversible Circuits (Assigned to: TBD)

[Gari13] R. Garipelly, P. M. Kiran, and A. S. Kumar, "A Review on Reversible Logic Gates and Their Implementation," Int'l J. Emerging Technology and Advanced Engineering, Vol. 3, No. 3, March 2013, pp. 417-423.

[Saee13] M. Saeedi and I. L. Markov, "Synthesis and Optimization of Reversible Circuits—A Survey," ACM Computing Surveys, Vol. 45, No. 2, 2013, pp. 1-34.

7. Parallel Processing in Machine Learning Using Neural Networks (Assigned to: Karthi N. Suryanarayana)

[Shar12] V. Sharma, S. Rai, and A. Dev, "A Comprehensive Study of Artificial Neural Networks," Int'l J. Advanced Research in Computer Science and Software Engineering, Vol. 2, No. 10, October 2012, pp. 278-284.

[Sze17] V. Sze, Y. H. Chen, T. J. Yang, and J. S. Emer, "Efficient Processing of Deep Neural Networks: A Tutorial and Survey," Proceedings of the IEEE, Vol. 105, No. 12, 2017, pp. 2295-2329.

8. Parallel Processing in Machine Learning Using Approximate Arithmetic (Assigned to: TBD)

[Jian17] H. Jiang, C. Liu, L. Liu, F. Lombardi, and J. Han, "A Review, Classification, and Comparative Evaluation of Approximate Arithmetic Circuits," ACM J. Emerging Technologies in Computing Systems, Vol. 13, No. 4, 2017, pp. 1-34.

[Jian20] H. Jiang, F. J. Santiago, H. Mo, L. Liu, and J. Han, "Approximate Arithmetic Circuits: A Survey, Characterization, and Recent Applications," Proceedings of the IEEE, Vol. 108, No. 12, 2020, pp. 2108-2135.

9. Parallel Processing in Machine Learning Using Stochastic Computation (Assigned to: TBD)

[Alag13] A. Alaghi and J. P. Hayes, "Survey of Stochastic Computing," ACM Trans. Embedded Computing Systems, Vol. 12, No. 2s, May 2013, pp. 1-19.

[Alag17] A. Alaghi, W. Qian, and J. P. Hayes, "The Promise and Challenge of Stochastic Computing," IEEE Trans. Computer-Aided Design of Integrated Circuits and Systems, Vol. 37, No. 8, November 2017, pp. 1515-1531.

10. Parallel Processing in Machine Learning Using Analog/Digital Components (Assigned to: Boning Dong)

[Hasl20] J. Hasler, "Large-Scale Field-Programmable Analog Arrays," Proceedings of the IEEE, Vol. 108, No. 8, August 2020, pp. 1283-1302.

[Lei19] T. Lei et al., "Low-Voltage High-Performance Flexible Digital and Analog Circuits Based on Ultrahigh-Purity Semiconducting Carbon Nanotubes," Nature Communications, Vol. 10, No. 1, 2019, pp. 1-10.

11. Parallel Processing in Machine Learning Using Graph Neural Networks (Assigned to: Zhaodong Chen)

[Dakk19] A. Dakkak, C. Li, J. Xiong, I. Gelado, and W. M. Hwu, "Accelerating Reduction and Scan Using Tensor Core Units," Proc. ACM Int'l Conf. Supercomputing, 2019, pp. 46-57.

[Raih19] M. A. Raihan, N. Goli, and T. M. Aamodt, "Modeling Deep Learning Accelerator Enabled GPUs," Proc. Int'l Symp. Performance Analysis of Systems and Software, 2019, pp. 79-92.

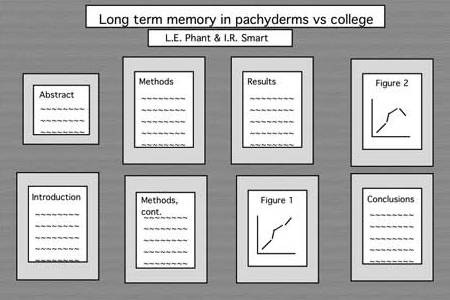

Poster Preparation and Presentation Tips

Here are some guidelines for preparing your research poster. The idea of the poster is to present your research results and conclusions thus far, to get oral feedback during the session from the instructor and your peers, and to provide the instructor with something to comment on before your final report is due (no presentation for winter 2021, so the instructor feedback will be written). Please send a PDF copy of the poster via e-mail by midnight on the poster due date.

Posters prepared for conferences must be colorful and eye-catching, as they are typically competing with dozens of other posters for the attendees' attention. Here are some tips and examples. Such posters are often mounted on a colored cardboard base, even if the pages themselves are standard PowerPoint slides. You can also prepare a "plain" poster (loose sheets, to be taped or pinned to a wall) that conveys your message in a simple and direct way. Eight to 10 pages, each resembling a PowerPoint slide, would be an appropriate goal. You can organize the pages into a 2 x 4 (2 columns, 4 rows), 2 x 5, or 3 x 3 array on the wall. The top two of these might contain the project title, your name, course name and number, and a very short (50-word) abstract. The final two can perhaps contain your conclusions and directions for further work (including work that does not appear in the poster, but will be included in your research report). The rest will contain brief descriptions of ideas, with emphasis on diagrams, graphs, tables, and the like, rather than text, which is very difficult for a visitor to absorb in a short time span.

Grade Statistics

All grades listed below are in [0.0, 4.3] (F to A+), unless otherwise noted

HW1 grades: Range = [B+, A+], Mean = 3.6, Median = B+

HW2 grades: Range = [C, A], Mean = 3.2, Median = B+

HW3 grades: Range = [B+, A], Mean = 3.8, Median = ~A

HW4 grades: Range = [B, A], Mean = 3.5, Median = B+/A–

HW5 grades: Range = [B–, A], Mean = 3.6, Median = ~A

Overall homework grades (percent): Range = [85, 102], Mean = 89, Median = 87

Research paper/poster grades (percent): Range = [70, 95], Mean = 83, Median = 83

Course letter grades: Range = [B, A], Mean = 3.6, Median = A–

References

Required text: B. Parhami, Introduction to Parallel Processing: Algorithms and Architectures, Plenum Press, 1999. Make sure that you visit the textbook's web page, which contains a list of errata. Lecture slides are also available there.

Optional recommended book: T. Rauber and G. Runger, Parallel Programming for Multicore and Cluster Systems, 2nd ed., Springer, 2013. Because ECE 254B's focus is on architecture and its interplay with algorithms, this book constitutes helpful supplementary reading.

On-line: N. Matloff, Programming Parallel Machines: GPU, Multicore, Clusters, & More. [Link]

Research resources:

The following journals contain a wealth of information on new developments in parallel processing: IEEE Trans. Parallel and Distributed Systems, IEEE Trans. Computers, J. Parallel & Distributed Computing, Parallel Computing, Parallel Processing Letters, ACM Trans. Parallel Computing. Also, see IEEE Computer and IEEE Concurrency (the latter ceased publication in late 2000) for broad introductory articles.

The following are the main conferences of the field: Int'l Symp. Computer Architecture (ISCA, since 1973), Int'l Conf. Parallel Processing (ICPP, since 1972), Int'l Parallel & Distributed Processing Symp. (IPDPS, formed in 1998 by merging IPPS/SPDP, which were held since 1987/1989), and ACM Symp. Parallel Algorithms and Architectures (SPAA, since 1988).

UCSB library's electronic journals, collections, and other resources

Miscellaneous Information

Motivation: The ultimate efficiency in parallel systems is to achieve a computation speedup factor of p with p processors. Although often this ideal cannot be achieved, some speedup is generally possible by using multiple processors in a concurrent (parallel or distributed) system. The actual speed gain depends on the system's architecture and the algorithm run on it. This course focuses on the interplay of architectural and algorithmic speedup techniques. More specifically, the problem of algorithm design for "general-purpose" parallel systems and its "converse," the incorporation of architectural features to help improve algorithm efficiency and, in the extreme, the design of algorithm-based special-purpose parallel architectures, are dealt with. The foregoing notions will be covered in sufficient detail to allow extensions and applications in a variety of contexts, from network processors, through desktop computers, game boxes, Web server farms, multiterabyte storage systems, and mainframes, to high-end supercomputers.

Catalog entry: 254B. Advanced Computer Architecture: Parallel Processing (4) PARHAMI. Prerequisites: ECE 254A. Lecture, 4 hours. The nature of concurrent computations. Idealized models of parallel systems. Practical realization of concurrency. Interconnection networks. Building-block parallel algorithms. Algorithm design, optimality, and efficiency. Mapping and scheduling of computations. Example multiprocessors and multicomputers.

History: The graduate course ECE 254B was created by Dr. Parhami, shortly after he joined UCSB in 1988. It was first taught in spring 1989 as ECE 594L, Special Topics in Computer Architecture: Parallel and Distributed Computations. A year later, it was converted to ECE 251, a regular graduate course. In 1991, Dr. Parhami led an effort to restructure and update UCSB's graduate course offerings in the area of computer architecture. The result was the creation of the three-course sequence ECE 254A/B/C to replace ECE 250 (Adv. Computer Architecture) and ECE 251. The three new courses were designed to cover high-performance uniprocessing, parallel computing, and distributed computer systems, respectively. In 1999, based on a decade of experience in teaching ECE 254B, Dr. Parhami published the textbook Introduction to Parallel Processing: Algorithms and Architectures (Website).

Offering of ECE 254B in winter 2020 (Link)

Offering of ECE 254B in winter 2019 (Link)

Offering of ECE 254B in winter 2018 (Link)

Offering of ECE 254B in winter 2017 (Link)

Offering of ECE 254B in winter 2016 (PDF file)

Offering of ECE 254B in winter 2014 (PDF file)

Offering of ECE 254B in winter 2013 (PDF file)

Offering of ECE 254B in fall 2010 (PDF file)

Offering of ECE 254B in fall 2008 (PDF file)

Offerings of ECE 254B from 2000 to 2006 (PDF file)